Enough – Ep. 1.5

This episode was inspired by an article I read about the race to develop artificial intelligence. It reminded me of a story that I heard about Joseph Heller, which got me thinking about the concept of enough.

References

Tech Giants Are Paying Huge Salaries for Scarce A.I. Talent

cowboys v. spacemen (Boulding’s original paper here)

freedom, books, flowers, and the moon

Transcript

Hi, this is Jaime Escuder. And welcome to another episode of None Sense.

I read a headline this morning and it reminded me of something, a story that I once heard about Kurt Vonnegut. And so, I’m gonna talk to you about all of that.

Here’s the headline, “Tech Giants Are Paying Huge Salaries for Scarce A.I. Talent: Nearly all big tech companies have an artificial intelligence project. And they are willing to pay experts millions of dollars to help get it done.”

Okay. And here is the Vonnegut story. This is Vonnegut speaking.

“True story, Word of Honor: Joseph Heller, an important and funny writer, now dead, and I were at a party given by a billionaire on Shelter Island. I said, ‘Joe, how does it make you feel to know that our host only yesterday may have made more money than your novel Catch 22 has earned in its entire history?’ And Joe said, ‘I’ve got something he can never have.’ And I said, ‘What on earth could that be, Joe?’ And Joe said, ‘The knowledge that I’ve got enough.'”

This podcast today is going to be about the idea of enough.

Enough is something that people really don’t do. We are avaricious and greedy. Most of us are demented, as Walt Whitman said, “with the mania of owning things.” This is why Imelda Marcos had over 1,200 pairs of shoes. This is why a guy named Dan Burnham spent $248,000 on a tree house for his grandkids. “Adorable and worth every penny,” said Mr. Burnham.

And we are rapacious.

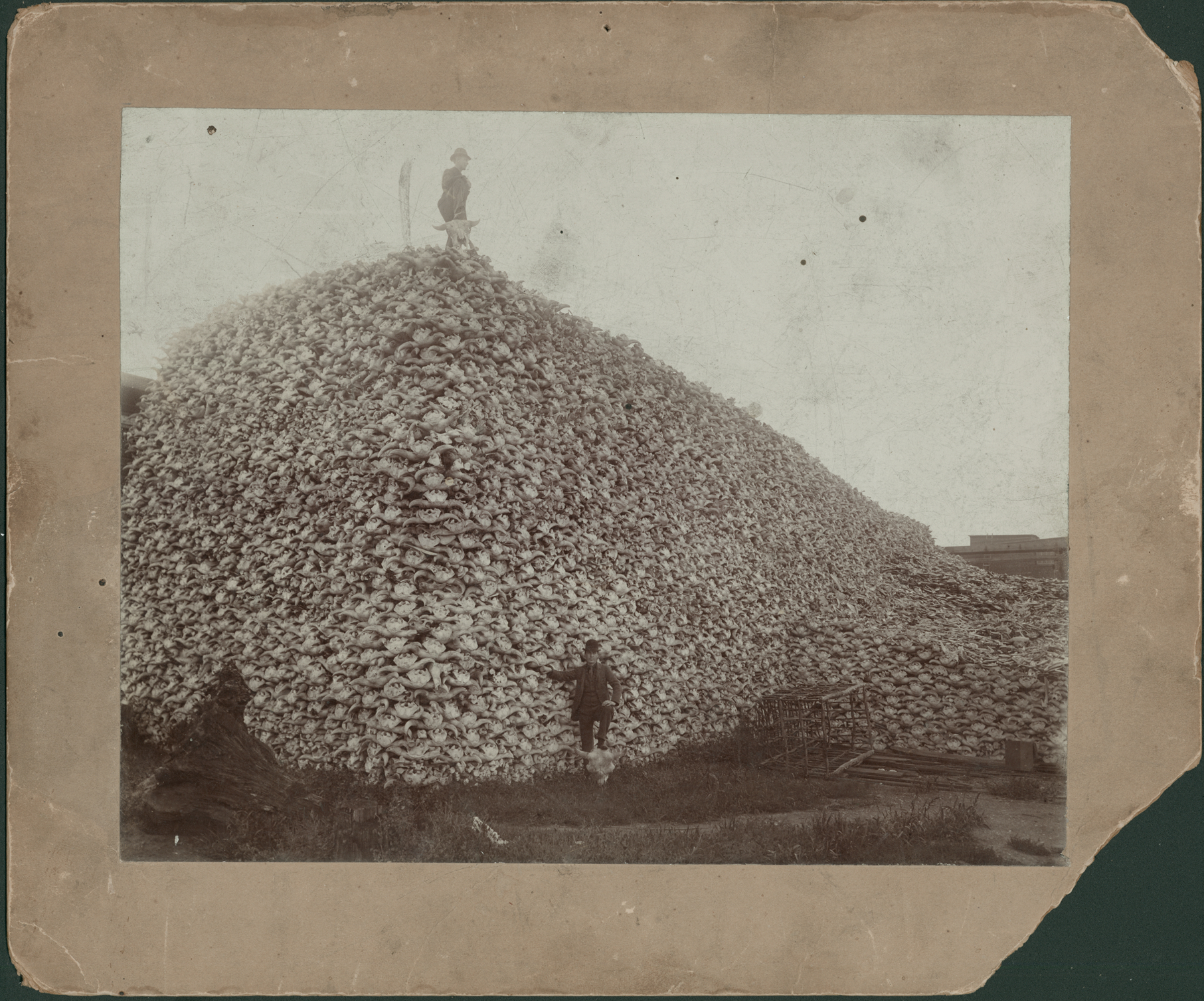

This is a thing that we do: we develop technologies, and then we abuse them. There used to be, think about this, 30 million buffalo in North America. Do you know how many un-hybridized buffalo there are in America right now? There’s a general population of 500,000. So, down from 30 million to 500,000. That’s an astonishing decline. [98.3%] But of those, you know how many are un-hybridized, meaning the actual buffalo that were here originally? 8,000. [0.027%]

Here’s another example. Did you know that the United States used to be the largest producer and exporter of caviar in the world? And that the caviar was of exceptionally high quality? This is from a website I found on the history of caviar. This is the quote, “There was so much American caviar being produced in North America at the time (so around the turn of the 20th century) that bars would serve the salty delicacy to encourage more beer drinking, as peanuts are served today.” At the turn of the 20th century, there was more caviar going to Europe from North America than from Russia. At that time, there were roughly 4 million pounds of sturgeon being harvested from the Great Lakes per year and now, virtually gone.

I’m mentioning this because it’s the idea that we don’t accept the concept of enough, as Joseph Heller did, that causes us to do things like fracking. And it’s also the idea that we don’t accept the fact that we have limitations, that we cannot be trusted with these technologies as we develop them, that causes us to create things like nuclear weapons, which we then do things like leave unguarded. They’ve been flown across the country by accident. [Same incident.] They’ve been overbuilt. There’s something like 4,000 nuclear weapons in the American arsenal.

https://twitter.com/realDonaldTrump/status/811977223326625792

[For the record, that tweet above is sheer madness. It’s also missing a period; can you believe it?]

And more than that, not only have we built too many of them, we built them far too powerful. The bomb that was dropped on Hiroshima killed 80,000 people instantly. And yet there was a bomb in Arkansas, and there’s a show about this, an American Experience episode called “Command and Control,” where this nuclear weapon almost detonated in Arkansas. And it was 600 times more powerful than the Hiroshima bomb. Just think about the folly of even developing one such weapon, let alone thousands of those weapons, and that’s what we’ve done.

And why am I thinking about this in relation to this article I read about tech companies just in a race to develop artificial intelligence? The reason is, this is another technology, even more so possibly, some people think, than nuclear weapons, that is completely capable of destroying us. And rather than acting with circumspection and hesitation and restraint, you have one company, Google’s DeepMind, which is their A.I. arm, paying on average $375,000 per employee to develop this stuff.

Now, I know that there are potentially lots of wonderful benefits to A.I. There are medical benefits. There’s the whole self-driving cars thing. And most importantly, and let’s not forget this, there is the fact that it’s going to make some people incredibly rich. But there are also risks. For example, there’s a famous example given by a guy named Nick Bostrom who’s done a lot of thinking about the risks of A.I. It’s called the paperclip maximizer example.

And he wrote a paper back in 2003, in which he said this, “It seems perfectly possible to have a superintelligence,” and that’s what we’re talking about with the development of A.I. because we have A.I. now. It’s in our phones. For example, when you take a picture of someone on your phone and there’s that little box that identifies the person’s face, well that’s A.I. at work. We already have A.I. that you can literally hold in the palm of your hand.

But for these companies, that’s not enough. I guess that’s the point of what I’m saying. We already have A.I. but they have decided that that’s not enough. They want super intelligent A.I. And Bostrom says, “It seems perfectly possible to have a superintelligence whose sole goal is something completely arbitrary, such as to manufacture as many paperclips as possible, and who would resist with all its might any attempt to alter this goal, with the consequence that it starts transforming first all of earth, and then increasing portions of space into paperclip manufacturing facilities.”

In this example, let’s say we think we’re doing something innocuous. We just wanna test the A.I., and we decide to give it the task of manufacturing paperclips. And it determines, “Great, I’m gonna make as many as possible.” And I’m not a scientist and I’m not a biologist. I don’t know. I’m just making stuff up here because I’m not super intelligent. But it could easily decide, “You know, the best atmosphere for paperclip manufacture is a carbon-rich atmosphere. There’s too much oxygen in this atmosphere.” So, it acts to cut down all the trees, destroy all the trees. Or it decides, “People are actually in the way of my paperclip production. I need to get rid of them.” Or it decides, “We need lots of water to make paperclips.” And so it starts polluting the water. We can’t even predict what it might do.

When do we ever stop and acknowledge the fact that we actually cannot be trusted with technology? Because if we could, then maybe there would still be some buffalo around. When do we stop and say the average lifespan right now is 80 years and that’s enough? When do we stop and say, “It’s okay for me to drive myself. That’s enough”? Is it not reasonable to say that any technology that has the potential of destroying all civilization, that’s too much?

There’s a guy named Kenneth Boulding. He was an economist. He was a lot of things. He was a real polymath. He was an economist, and a poet, and everything. In 1966, he wrote a paper called “The Economics of the Coming Spaceship Earth.” He said, “We need to start rethinking our planet, and we need to re-imagine it from an open system to a closed system.” And he called these the differences between a cowboy economy and a spaceman economy. He said, “The cowboy economy was symbolic of the illimitable plains, and also associated with reckless, exploitative, romantic, and violent behavior. But the closed economy of the future, which we can call the spaceman economy, we have to think about the earth as becoming a single spaceship without unlimited reservoirs of anything, either for extraction or for pollution, and in which, therefore, man must find his place in a cyclical, ecological system.”

Before we developed certain technologies, let’s say, for example, fracking, it was okay to dig around in the earth and take out as much as we could, because we couldn’t possibly take everything. Or, for example, before we developed the rifle, it was okay to hunt as many buffalo as possible because it was just not possible to kill 30 million individual buffalo. But when the railroads came, and the rifle came, and all of these things came, it was possible and we did it.

As we stand now on the cusp of another thing that we’re frantically developing, A.I., and we’re not even pausing at all to look at how it potentially really could destroy us all, I wish there was more discussion of what enough is. Oscar Wilde said, “With freedom, books, flowers and the moon, who could not be happy?”

Unfortunately, it seems like there’s a lot of people. And I think the reason is they’re not looking at the things that they have already. Because if they did, they would see that really, it is enough. And the other thing is they only look at the possible benefits of the thing, never considering that the negatives are real and also likely.

I wanna go back to Nick Bostrom for a minute. He has this other interesting idea called the great filter. He was thinking about why we have not found any signs of extraterrestrial life. And he has a simple idea. And the idea is the reason we haven’t found any is because none of it exists. And the reason it doesn’t exist is because at some point in the development of all intelligent life, it’s possible that they must invent something that leads to their destruction. “It’s not far-fetched,” he says, “to suppose that there might be some possible technology which is such that A, virtually all sufficiently advanced civilizations eventually discover it and B, its discovery leads almost universally to existential disaster.”

Now, it’s my belief that most likely the discovery of nuclear weapons is that thing for mankind. I think it’s just incredibly lucky that in the decades since we discovered nuclear weapons, there has not been some catastrophic, cataclysmic thing that’s happened, that’s led to just the destruction of all life. If it’s not nuclear weapons, there is at least the possibility that it is artificial intelligence, a thing that we are rushing headlong into the development of. And maybe, just maybe, the mere possibility that it is that ought to be enough for us to say enough.

Thank you as always for listening.
