VR and 360 Video


1. What is VR?

I’m pretty sure that by the time you clicked play on this video, you already had a pretty good idea of what VR is, so I’ll make this as quick as possible so we can get into the good tech stuff.

I’m not gonna focus on CGI based VR nor things like panoramic stills or really hardcore things like stereoscopic VR. To keep this episode manageable, we’re gonna stick with mono live action based VR. Wanna get you home in time for supper.

VR, virtual reality, is an immersive visual and audio experience, sometimes consisting of live action and many times being partly computer blended. The viewer – and as of today, the person wearing the headset – is placed within an environment and can interact to some degree with that environment.

There is a fine line between VR and AR – augmented reality. AR, as the name implies, augments our existing environment – Iron Man’s Heads up display or HUD in his helmet is a very basic example of this. Virtual reality transports you somewhere different – even if it’s just your neighbor’s house …when they’re home, of course.

VR can be 360 degrees or, in some cases, less than that. Limiting the view not only helps the creators focus the story within a certain visual space, it also helps hide many of the tricks used in production to get the shot.

I won’t bore you with talking about the bajillions of dollars poured into the industry, nor the marketing fluff surrounding VR, and we’ll just get to the tech goodness of the medium.

2. How do I shoot VR?

So ya wanna shoot VR, do ya? Break out that credit card. You’re gonna need a lot of cameras: at least 3-4 if you’re using fisheye lenses, and 5 at a minimum, more likely 6 or more, with more standard lenses. These cameras also have to sit in a camera rig that positions your camera du jour “just so”, giving you the maximum amount of coverage with just enough overlap that you can stitch the angles together in post.

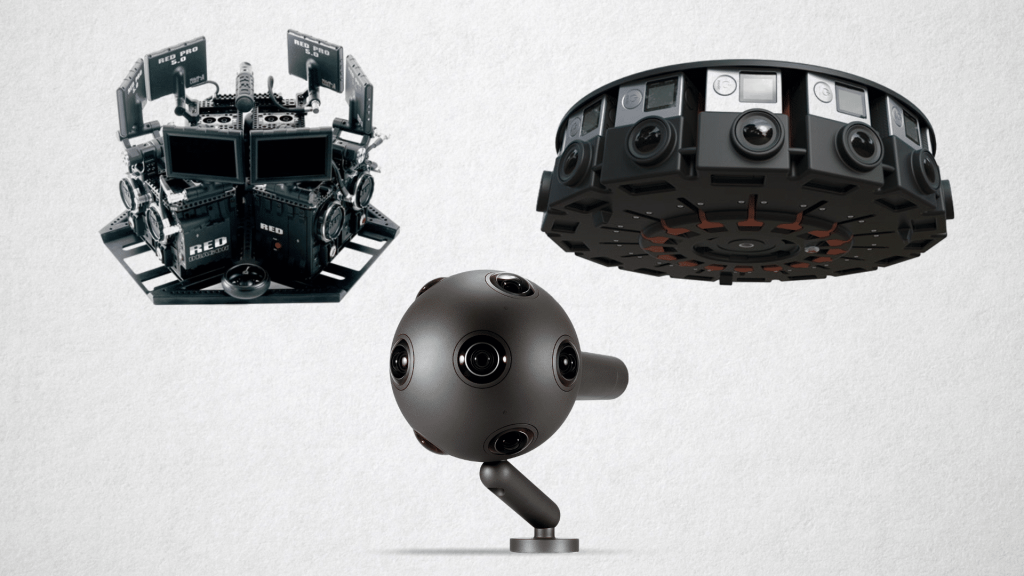

Quite frankly, you can use just about any camera out there, but not all VR rigs fit all cameras. GoPros and Nikons are common weapons of choice, and higher-end folks will use a batch of RED cameras, the 16-camera rig by Google Jump, or the all-in-one Nokia Ozo. Jaunt also has a fantastic rig, but their tech and workflow are a bit different than what we’ll be covering today – but check them out.

You also need to decide if you’re shooting cylindrical or spherical. Meaning, is most of your action happening in the landscape around you, or, above and below you? If it’s more of a limited field of view, you can forgo the cameras at the sky and floor of your shot, and instead use those cameras to get increased resolution within the landscape of your shot, thus shooting cylindrical. This also gives you a slight advantage if you need to hide the tripod, or lights from above in your shot.

Otherwise, when you absolutely, positively need to get every single thing in the shot, you go spherical.

You’re also gonna need a healthy serving of fast, quality memory cards for your cameras. You can’t afford to save a few bucks on substandard cards and lose data…1 lost shot and the entire sequence is, well,…shot. You’ll also need card readers, camera batteries, and storage space. After all, every camera in the rig is rolling at once, so you’re recording and storing many times more data than on a single-camera shoot.
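To get a feel for how much storage that means, here is a rough back-of-the-envelope sketch. The function name and the numbers (6 cameras, 60 Mbit/s per camera, 90 minutes of rolling time) are made up for illustration; plug in the real bitrate of whatever codec your cameras record.

```python
def footage_size_gb(cameras: int, bitrate_mbps: float, minutes: float) -> float:
    """Approximate total recorded data in decimal gigabytes.

    bitrate_mbps is the per-camera recording bitrate in megabits per second.
    """
    total_megabits = cameras * bitrate_mbps * minutes * 60
    # megabits -> megabytes (divide by 8) -> gigabytes (divide by 1000)
    return total_megabits / 8 / 1000


if __name__ == "__main__":
    # Hypothetical shoot: 6 cameras, 60 Mbit/s each, 90 minutes rolling.
    print(f"{footage_size_gb(6, 60, 90):.0f} GB")  # prints "243 GB"
```

Note that this is only the camera originals. Backups, stitched masters, and proxies all come on top of that figure.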

OK, so you’ve got your rig, you’ve got your cameras, you’ve got your lighting magically hidden so it’s not in the shot, and you’re ready to record. Not so fast there, Lawnmower Man. A few things you need to know.

First, make sure all cameras are recording in the same way. Meaning – same codec, same frame size, and frame rate, same exposure, same white balance, etc. Try and shoot in a flatter look, too – so when you do start color grading, it’ll be easier to balance the cameras in post. Lastly, if you can, jam sync all of the cameras for common time code. Any difference between the cameras, from a visual or metadata standpoint, will not be easily solved in post. Seriously, spend the time and get it right on set.
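One way to catch a stray setting before you roll is to compare the settings of every camera against the rest of the rig. The sketch below assumes you have already collected each camera’s settings into a plain dictionary (by hand, or from whatever metadata tool you use); the camera names, setting names, and the `find_mismatches` helper are all hypothetical.

```python
from collections import Counter


def find_mismatches(cameras: dict[str, dict]) -> dict[str, dict[str, object]]:
    """Return {setting: {camera: value}} for cameras that differ from the majority."""
    mismatches = {}
    # Collect every setting name that appears on any camera.
    settings = sorted({key for meta in cameras.values() for key in meta})
    for setting in settings:
        values = {name: meta.get(setting) for name, meta in cameras.items()}
        # The most common value is treated as the intended one.
        majority, _ = Counter(values.values()).most_common(1)[0]
        odd = {name: value for name, value in values.items() if value != majority}
        if odd:
            mismatches[setting] = odd
    return mismatches


if __name__ == "__main__":
    rig = {
        "cam1": {"codec": "h264", "frame_size": (1920, 1440), "fps": 29.97, "white_balance": 5600},
        "cam2": {"codec": "h264", "frame_size": (1920, 1440), "fps": 29.97, "white_balance": 5600},
        "cam3": {"codec": "h264", "frame_size": (1920, 1440), "fps": 30.0, "white_balance": 5600},
    }
    for setting, odd in find_mismatches(rig).items():
        print(f"{setting} differs on: {odd}")
```

The majority vote is just a convenience. If you have a written spec for the shoot, comparing every camera against that spec is the safer check.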

There are some basic guidelines for shooting. As we’re focusing on tech, I’ll skip the more artistic guidelines.

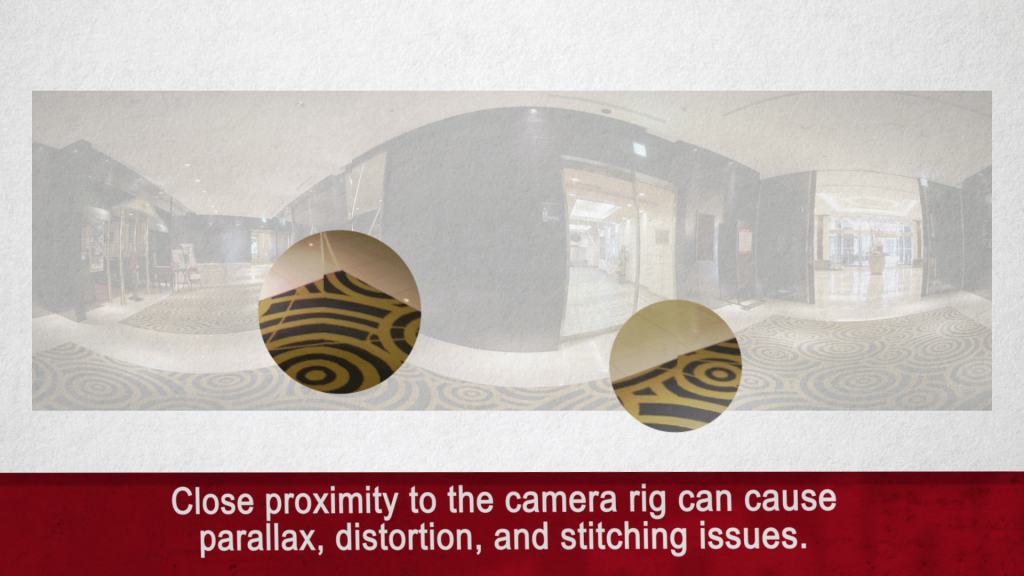

First: Talent: Don’t get too close to the camera.

Anyone closer than 6 feet to the camera rig can get really distorted. Be careful.

Also, the knee-jerk reaction is to try and record your talent in stereo or with surround sound mics. Don’t. Record the talent in mono as you’ve normally done, and manipulate it in post. It also somewhat future-proofs you as VR technology begins to more easily incorporate perceptual audio into post workflows and presentation technology.

3. How do I edit VR?

Well, just like, and I can’t believe I’m saying this, regular old 2D Video, the line between production and post has blurred. Post is starting on location, and VR is no exception.

You’ll need to get the data off of the cards, hence the reason I mentioned needing a bunch of card readers. You’ll want to be incredibly organized with your data, including naming conventions, and organizing by card and take, and using software solutions to assist.

Kolor is a very popular option – they specialize in data wrangling for VR and also handle some stitching and other post processes for VR productions.

Whatever solution you use, make sure the solution has checksumming built in. You can’t afford to have a bad card copy from a Finder or Explorer drag and drop.
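If you’re curious what checksumming actually does under the hood, here is a minimal sketch using only the Python standard library: copy the file, hash both the original and the copy, and refuse to continue if the hashes differ. This is not how any particular offload tool works; the function names are made up and SHA-256 is simply one reasonable choice of hash.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large clips never have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verified_copy(source: Path, destination: Path) -> str:
    """Copy source to destination and confirm both files hash identically."""
    destination.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(source, destination)
    source_hash = sha256_of(source)
    copy_hash = sha256_of(destination)
    if source_hash != copy_hash:
        raise IOError(f"Checksum mismatch for {source.name}")
    # Return the hash so it can be logged alongside the clip.
    return source_hash
```

Keeping the returned hash in a log next to the card and take name means you can re-verify the footage later, for example after moving it to shared storage.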

You’ll need sizeable and reliable storage. If you have multiple editors, look into a robust SAN or NAS solution. You can check out our previous 3 part series on “choosing the right shared storage” to find one that works best for you.

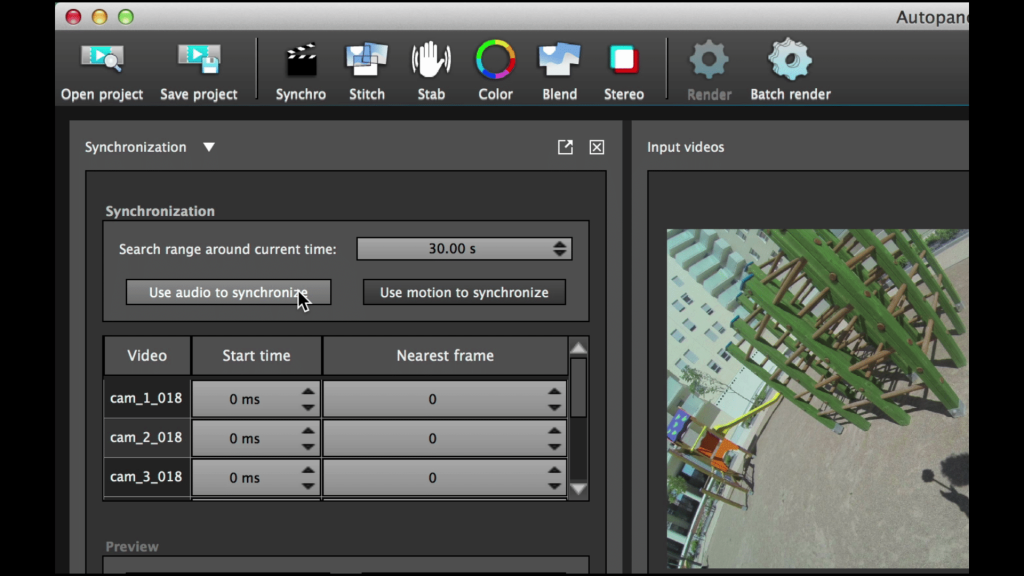

So you’ve got your clips, now you need to sync them and stitch this video together into a flat equirectangular image that you can view on your computer screen. An equirectangular image is the same kind of projection commonly used in maps of the Earth, as we’ve tried to represent the spherical Earth on a flat map since…well, forever.
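The math behind that flat image is simple: the horizontal axis covers the full 360 degrees of yaw and the vertical axis covers the 180 degrees of pitch, which is why a full-sphere equirectangular frame has a 2:1 aspect ratio. Here is a small sketch of that mapping. The function name and the angle conventions (yaw 0 at the center of the frame, positive pitch looking up) are assumptions for illustration; stitching tools may use different conventions.

```python
def direction_to_pixel(yaw_deg: float, pitch_deg: float,
                       width: int, height: int) -> tuple[float, float]:
    """Map a viewing direction to (x, y) in an equirectangular frame.

    yaw_deg:   -180..180, 0 is the center of the frame, positive is to the right
    pitch_deg:  -90..90,  0 is the horizon, positive is up
    """
    x = (yaw_deg + 180.0) / 360.0 * width   # longitude spreads across the width
    y = (90.0 - pitch_deg) / 180.0 * height  # latitude runs top (up) to bottom (down)
    return x, y


if __name__ == "__main__":
    # Looking straight ahead lands in the middle of a 3840x1920 frame.
    print(direction_to_pixel(0, 0, 3840, 1920))  # prints "(1920.0, 960.0)"
```

This also shows why the top and bottom of the frame look so stretched: the single point straight up is smeared across the entire top row of pixels.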

Now, creating this equirectangular image is tricky. This is where the aforementioned Kolor comes into play.

Kolor can use timecode and other clues from the files to start attempting to stitch them together. More often than not, you’ll need to tweak the stitching, as we’re not at a point where it can be done 100% successfully in an automated fashion, although the all-in-one shoot, stitch, and upload solution by Jaunt comes pretty close.

From here, you render out a single video file, and then begin the process of forming a narrative or experience inside your NLE du jour.

For editorial, this will certainly include a hefty amount of time hiding seams or talent crossing the stitch areas, reducing camera jitter, or fixing on-set production issues by rotoscoping. It’s honestly more triage than pure creativity with some of your early projects…as well as learning what kind of pacing, transitions, and text work in VR vs. old HD video.

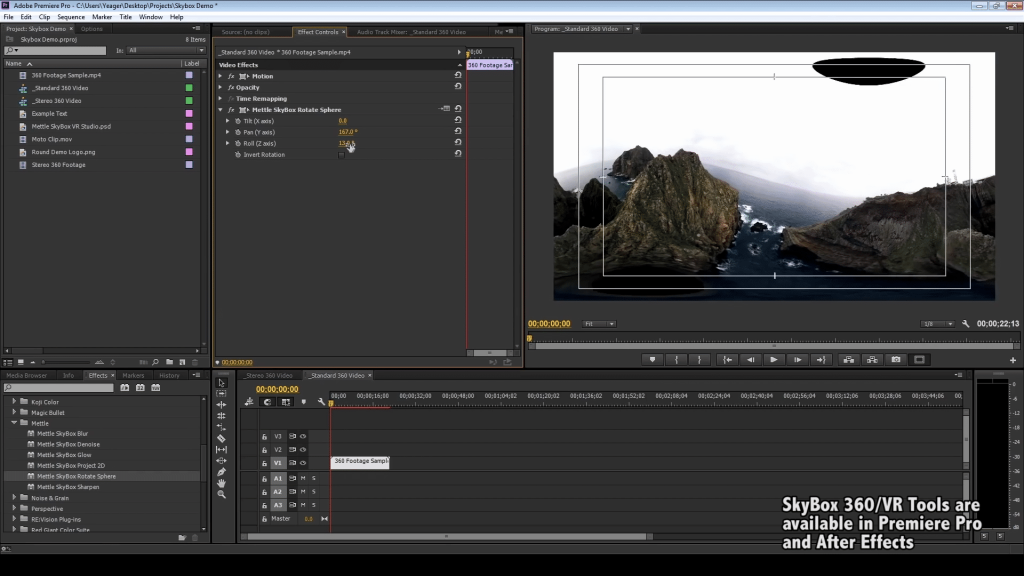

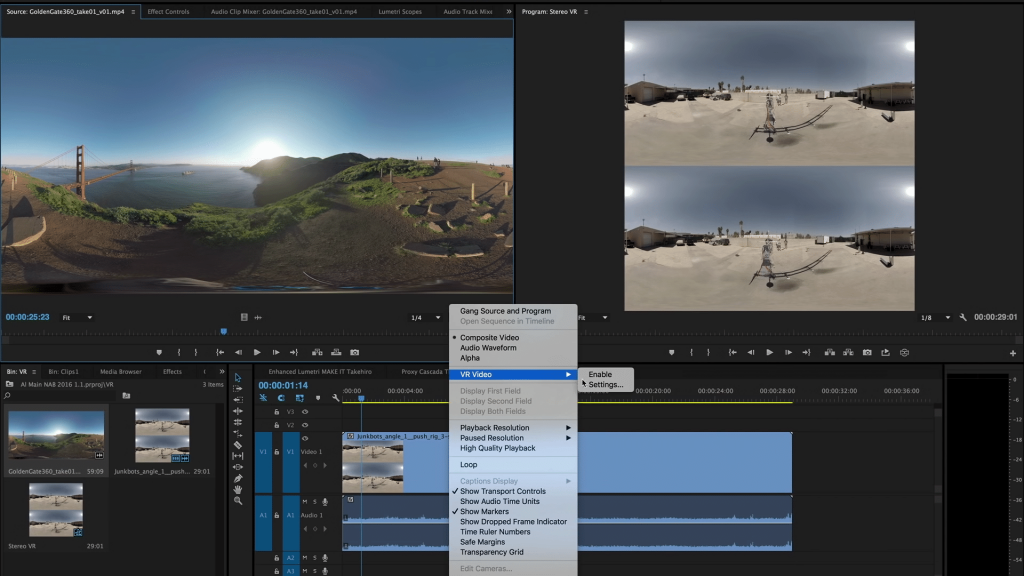

For creative editorial, more often than not, the picture editing is done in Premiere, using tools like the Skybox solutions from Mettle.

I expect Premiere usage for VR to continue given the new VR playback features inside the planned Summer 2016 update to Premiere.

That being said, Tim Dashwood’s 360/VR plugin is pretty groovy for FCPX.

As a general rule, transcoding to a proxy format to make editorial a bit less painful is a good way to go about things. The inflated resolution from all the stitched angles can kill performance on older computers.
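When you pick a proxy size, you generally want to keep the aspect ratio of the stitched master and land on even pixel dimensions, since many codecs that use 4:2:0 chroma subsampling require them. A tiny sketch of that arithmetic follows; the function name and the 1920-pixel default width are assumptions, not a recommendation from any particular NLE.

```python
def proxy_size(width: int, height: int, target_width: int = 1920) -> tuple[int, int]:
    """Scale a frame down to target_width, keeping aspect ratio and even dimensions.

    target_width is expected to be an even number.
    """
    if width <= target_width:
        # Already small enough; no point in upscaling a proxy.
        return width, height
    scale = target_width / width
    # Round the height to the nearest even number.
    proxy_height = round(height * scale / 2) * 2
    return target_width, proxy_height


if __name__ == "__main__":
    # A hypothetical 7680x3840 stitched master becomes a 1920x960 proxy.
    print(proxy_size(7680, 3840))  # prints "(1920, 960)"
```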

4. How do I view VR?

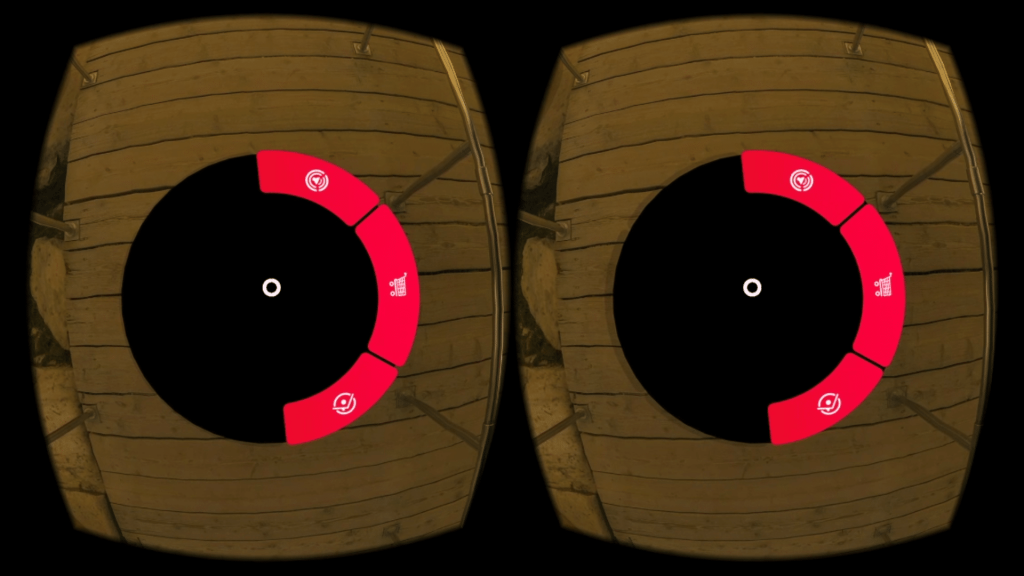

What, you’re telling me you want folks to actually consume what you’re serving? You’re so demanding. We have a few ways, and all involve an HMD – or head-mounted display. Let’s start with the most common: Your smartphone.

This is the easiest way to dip your toe in the murky VR waters. Many devices, such as this View-Master here, can accommodate your cell phone – using your mobile device as the VR video player. Google Cardboard is a very popular sub-$20 solution to get you started, and with a quick Amazon search, you’ll find a ton of other very similar alternatives.

One of the major drawbacks with using smartphones for VR is effective resolution: the visual quality is severely lowered when the phone is pushed up against your face.

You can actually see the pixels on the screen – and this negates some of the realism promised to us by VR advocates. There is little doubt, however, that this issue will decrease as mobile screen technology improves.
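A quick way to put a number on that is pixels per degree: how many screen pixels cover each degree of your field of view. The sketch below assumes the phone screen is split evenly between both eyes and that the headset lenses spread each half across a given field of view. The 2560-pixel width and 90-degree field of view are illustrative values only, not the specs of any particular phone or viewer.

```python
def pixels_per_degree(screen_width_px: int, fov_degrees: float, eyes: int = 2) -> float:
    """Horizontal pixels available per degree of view for each eye."""
    pixels_per_eye = screen_width_px / eyes
    return pixels_per_eye / fov_degrees


if __name__ == "__main__":
    # Hypothetical phone: 2560 pixels wide, viewed through 90-degree lenses.
    print(f"{pixels_per_degree(2560, 90):.1f} px/degree")  # prints "14.2 px/degree"
```

Each eye only gets half of the panel, and that half is stretched across a very wide angle, which is why a screen that looks razor sharp in your hand looks coarse in a headset.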

If you wanna step up in the world, we can move to a more robust solution: one that is powered by a workstation. Tethered devices, like Oculus Rift and the Sony Morpheus, rely on the horsepower that a larger computer can deliver. Of course, these HMD units are several hundred dollars and still require the computer to power them. Most likely, we’ll see this methodology find itself useful in the gaming market where you already have the console to power the headset.

Lastly, we have YouTube 360 – which, as the name implies, is YouTube but with 360 videos. You can play these on whatever device supports YouTube and 360 videos – your mobile device or console. The YouTube 360 channel has close to 1.2 million subscribers, so the interest is definitely there.

5. What’s the future?

Ahhh yes, the question everyone has been asking. So, here’s my 2 cents.

We’ve been looking for more immersive video since the first flickering images appeared on the first makeshift CRT. Color was more real than black and white, and HD was more real than SD. VR is simply another step in that immersion evolution. Where I believe VR may be able to capitalize on this is that it’s not only more visually immersive, but, unlike the linear 3D it’s commonly compared to, VR is emerging as an individual interactive experience, almost a choose-your-own-adventure in some respects. This is the kind of immersion people are looking for.

Certainly, non-linear applications like gaming, tourism, health care, education, marketing, and the adult industry are easy fits, and these industries collectively earn way more money than the film and TV industry ever will. But will audiences widely adopt headsets or tethered systems, eventually paying for them in theaters and for their home TVs? And will consumer demand force the hand of the studio system to mass-produce cinematic VR content to supply the consumers? I’m not sold….but it’s pretty cool anyway, huh?

Have more VR concerns other than just these 5 questions? Ask me in the Comments section. Also, please subscribe and share this tech goodness with the rest of your techie friends.

Until the next episode: learn more, do more – thanks for watching.
